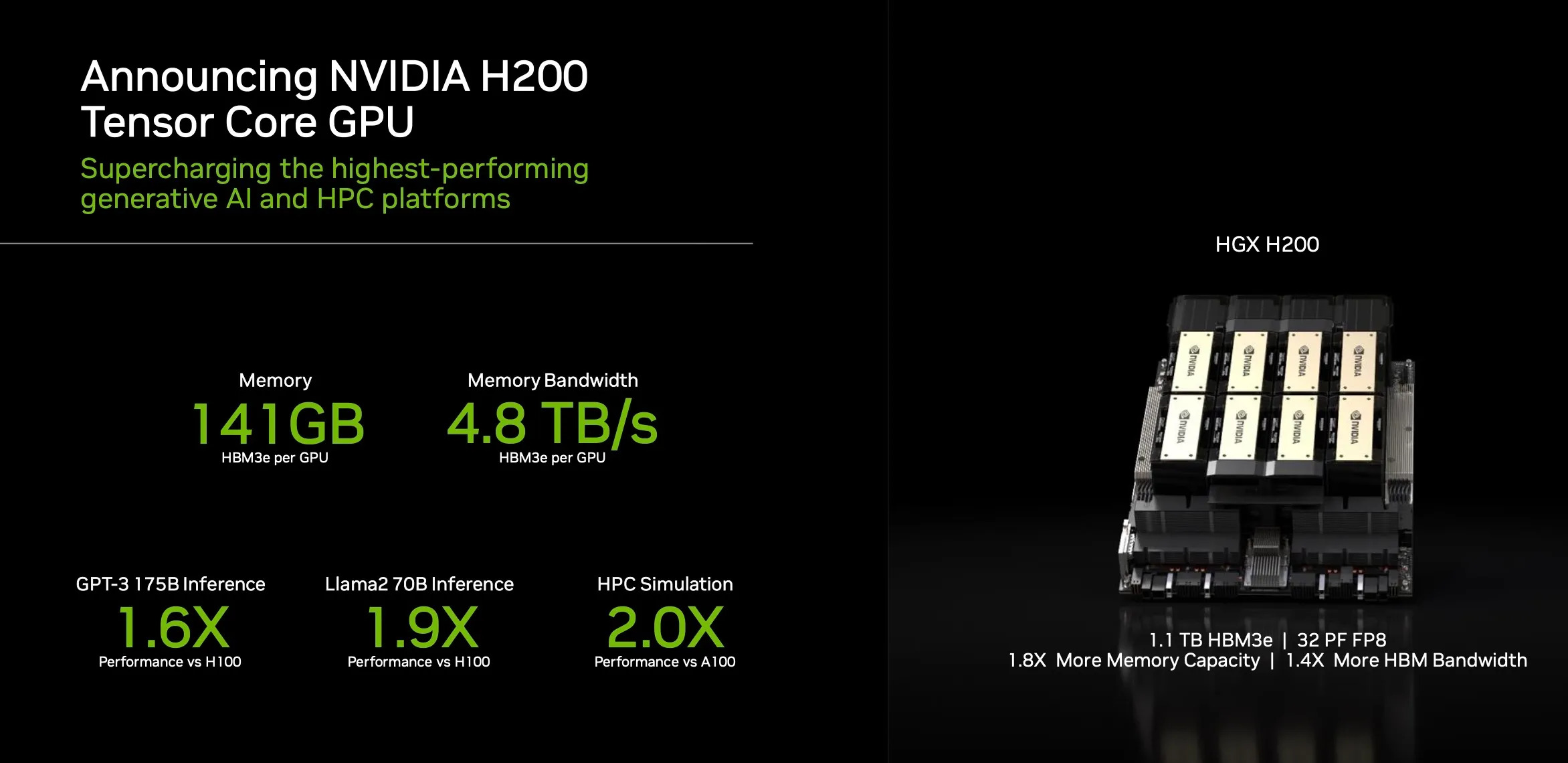

NVIDIA, a leading company in graphics and artificial intelligence, has announced the Hopper H200 GPU. It is a platform for artificial intelligence and high-performance computing, designed to handle large and complex generative AI workloads such as large language models, recommender systems, and vector databases. NVIDIA says the H200 is the first GPU to use HBM3e, the newest and fastest generation of high-bandwidth memory. HBM3e gives the H200 141 GB of memory capacity and 4.8 TB/s of memory bandwidth, which lets it hold and run larger AI models than earlier Hopper GPUs.

The same HBM3e memory also appears in NVIDIA's updated GH200 Grace Hopper Superchip. This is a closely related but distinct product that pairs a 72-core Grace CPU with a GH100 (Hopper) GPU on a single module. The Grace CPU has 480 GB of ECC LPDDR5X memory. The GH100 GPU has 141 GB of HBM3e memory, which comes in six 24 GB stacks and uses a 6,144-bit memory interface. In a dual-superchip configuration, NVIDIA quotes up to 8 petaflops of AI performance and 10 TB/s of combined memory bandwidth.
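As a rough sanity check on these figures, the arithmetic below multiplies out the stack count. It then derives the average per-pin data rate implied by the 4.8 TB/s bandwidth quoted for the H200. This is a sketch under two assumptions: the H200 uses the same six-stack, 6,144-bit interface described for the GH200's GPU, and "TB" means 10^12 bytes. The per-pin rate is a derived number, not an NVIDIA specification.

```python
# Worked arithmetic for the HBM3e figures quoted in the article.
# ASSUMPTION: the 4.8 TB/s H200 bandwidth is delivered over the same
# six-stack, 6,144-bit interface described for the GH200's GPU.

STACKS = 6               # HBM3e stacks (from the article)
GB_PER_STACK = 24        # nominal capacity per stack in GB (from the article)
BUS_WIDTH_BITS = 6_144   # total memory interface width (from the article)
BANDWIDTH_TB_S = 4.8     # H200 bandwidth in TB/s, decimal units (from the article)

def nominal_capacity_gb(stacks: int, gb_per_stack: int) -> int:
    """Physical capacity if every stack were fully exposed."""
    return stacks * gb_per_stack

def bits_per_stack(bus_width_bits: int, stacks: int) -> int:
    """Interface width contributed by each stack."""
    return bus_width_bits // stacks

def implied_pin_rate_gbps(bandwidth_tb_s: float, bus_width_bits: int) -> float:
    """Average per-pin data rate implied by total bandwidth and bus width.

    bytes/s * 8 gives bits/s; dividing by the number of data pins gives
    bits/s per pin, reported here in Gbit/s.
    """
    bits_per_second = bandwidth_tb_s * 1e12 * 8
    return bits_per_second / bus_width_bits / 1e9

if __name__ == "__main__":
    print(nominal_capacity_gb(STACKS, GB_PER_STACK))   # 144
    print(bits_per_stack(BUS_WIDTH_BITS, STACKS))      # 1024
    print(implied_pin_rate_gbps(BANDWIDTH_TB_S, BUS_WIDTH_BITS))  # 6.25
```

The nominal 6 × 24 GB = 144 GB is slightly more than the 141 GB NVIDIA quotes, so not all of the physical capacity is exposed. The sketch does not try to explain that gap.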

Multiple Grace Hopper Superchips can be linked with NVIDIA's NVLink interconnect, which lets them work together and access each other's memory. NVIDIA's DGX GH200 system uses this approach to connect up to 256 superchips into a single shared memory space of 144 TB for giant AI models. The new GH200 platform is also compatible with the NVIDIA MGX server specification, so server makers can build it into a variety of system designs.
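The 144 TB figure can be reproduced with simple multiplication, under two assumptions the article does not state. First, each of the 256 superchips in the DGX GH200 is the original GH200, with 96 GB of HBM3 (rather than the 141 GB HBM3e version) alongside its 480 GB of LPDDR5X. Second, "TB" here is a binary unit (1 TB = 1,024 GB). Treat this as an illustrative check, not NVIDIA's own accounting.

```python
# Illustrative check of the DGX GH200 shared-memory figure.
# ASSUMPTIONS (not from the article): each superchip is the original
# HBM3-based GH200 with 96 GB of GPU memory, and "TB" means 1,024 GB.

SUPERCHIPS = 256   # superchips in one DGX GH200 (from the article)
CPU_MEM_GB = 480   # LPDDR5X per Grace CPU (from the article)
GPU_MEM_GB = 96    # HBM3 per GPU (assumption: original GH200)

def shared_memory_gb(superchips: int, cpu_gb: int, gpu_gb: int) -> int:
    """Total CPU plus GPU memory pooled across all NVLink-connected superchips."""
    return superchips * (cpu_gb + gpu_gb)

def gb_to_binary_tb(gb: int) -> float:
    """Convert GB to TB using 1 TB = 1,024 GB."""
    return gb / 1024

if __name__ == "__main__":
    total = shared_memory_gb(SUPERCHIPS, CPU_MEM_GB, GPU_MEM_GB)
    print(total)                   # 147456
    print(gb_to_binary_tb(total))  # 144.0
```

Under the same assumptions, substituting 141 GB of GPU memory gives 256 × 621 GB = 158,976 GB (about 155 binary TB). The 144 TB figure therefore fits the HBM3 configuration rather than the HBM3e one.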

NVIDIA expects the H200 to be available in the second quarter of 2024. It describes the H200 as its most advanced platform yet for generative AI and high-performance computing. The H200 is a data-center product, not a gaming one.

The PC gaming controversy over Application Optimization (APO) is a separate story, and it involves Intel, not NVIDIA. Intel's 14th-generation Core desktop processors (Raptor Lake Refresh), launched in October 2023, introduced a feature called Intel Application Optimization (APO). APO reportedly boosts frame rates in supported games by up to 200 fps, depending on the scenario and configuration. At launch, Intel demonstrated APO in only two games, Rainbow Six Siege and Metro Exodus. Intel has said the feature will not be available on its previous generations, the 12th-gen Alder Lake and the 13th-gen Raptor Lake. All three generations share the same hybrid P-core/E-core design. Some experts and enthusiasts have challenged this decision and suggested that APO could be enabled on 12th-gen and 13th-gen processors through software updates or modifications. They argue that APO is essentially a software-based technology that does not rely on any specific hardware feature. In their view, Intel is artificially restricting it to give its 14th-gen processors a marketing advantage.

Intel has not given a clear explanation for this decision. It has hinted, however, that APO depends on hardware changes not present in earlier processors. For now, Intel maintains that APO is exclusive to its 14th-gen processors.

Intel's decision to limit APO to its 14th-gen processors has sparked controversy and backlash from parts of the PC gaming community. Critics feel that Intel is neglecting existing customers and products and trying to force them to upgrade. Some gamers have called the move greedy and unfair. Others say they will switch to AMD, Intel's main rival in the CPU market, which they see as offering more competitive and affordable products in recent years.

On the other hand, some gamers have defended the decision. They argue that APO is a new technology that Intel is entitled to reserve for its latest processors, and to implement and distribute as it sees fit. Some have praised Intel for developing APO and say they look forward to trying it on their 14th-gen systems. Others point out that APO is not a necessity: it works in only a few games, and gaming performance also depends on other factors such as the GPU, RAM, and SSD.

Intel's APO feature has the potential to change the game for PC gaming, but it also has the potential to change the game for Intel itself. Whether APO becomes a game-changer or a game-limiter for Intel and its customers remains to be seen.

Sources:

• NVIDIA announces H200 AI GPU: up to 141GB of HBM3e memory with 4.8TB/sec bandwidth. https://www.tweaktown.com/news/94339/nvidia-announces-h200-ai-gpu-up-to-141gb-of-hbm3e-memory-with-4-8tb-sec-bandwidth/index.html

• Nvidia's H200 GPU 'emphasizes' how important memory is for AI: Wells Fargo. https://www.msn.com/en-us/news/technology/nvidias-h200-gpu-emphasizes-how-important-memory-is-for-ai-wells-fargo/ar-AA1jUCMJ

• H200: Nvidia's latest AI chip with 141GB of super-fast memory. https://www.techzine.eu/news/devices/113275/h200-nvidias-latest-ai-chip-with-141gb-of-super-fast-memory/

• Nvidia Reveals GH200 Grace Hopper GPU With 141GB of HBM3e. https://www.tomshardware.com/news/nvidia-reveals-gh200-grace-hopper-gpu-with-141gb-of-hbm3e

• DGX GH200 for Large Memory AI Supercomputer | NVIDIA. https://www.nvidia.com/en-us/data-center/dgx-gh200/